Somebody built a ChatGPT-powered calculator as a joke

https://github.com/Calvin-LL/CalcGPT.io

TODO: Add blockchain into this somehow to make it more stupid.

This might be a joke, but it’s making a very important point about how AI is being applied to problems where it has no relevance.

Doesn’t that make it art?

Art can be a joke; a joke can be art.

That’s what I meant.

I can’t remember who it was (I want to say Chip Lord?), but someone critical of their work asked, “What is art?” and the artist answered by putting up a display of the word “ART” in giant letters.

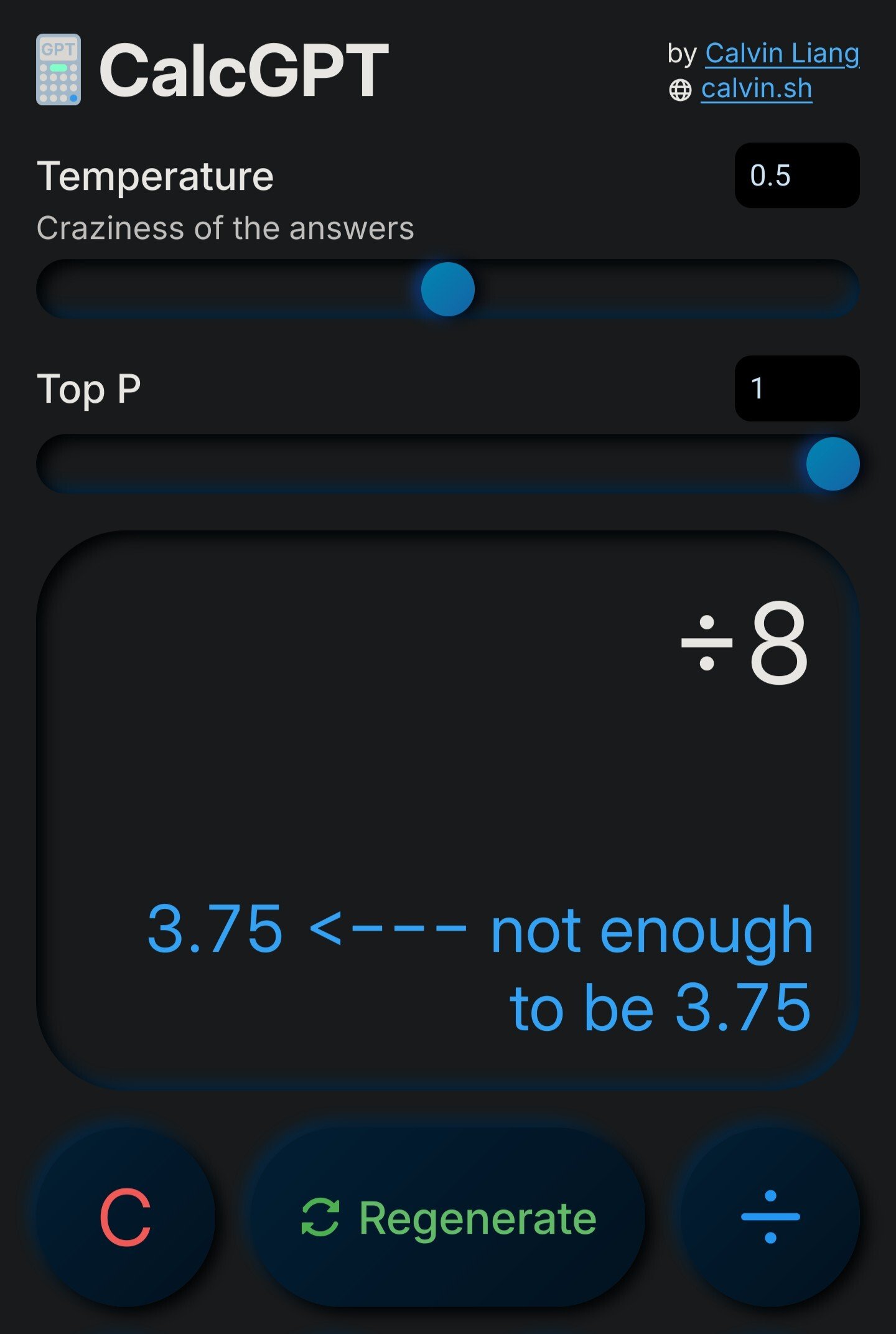

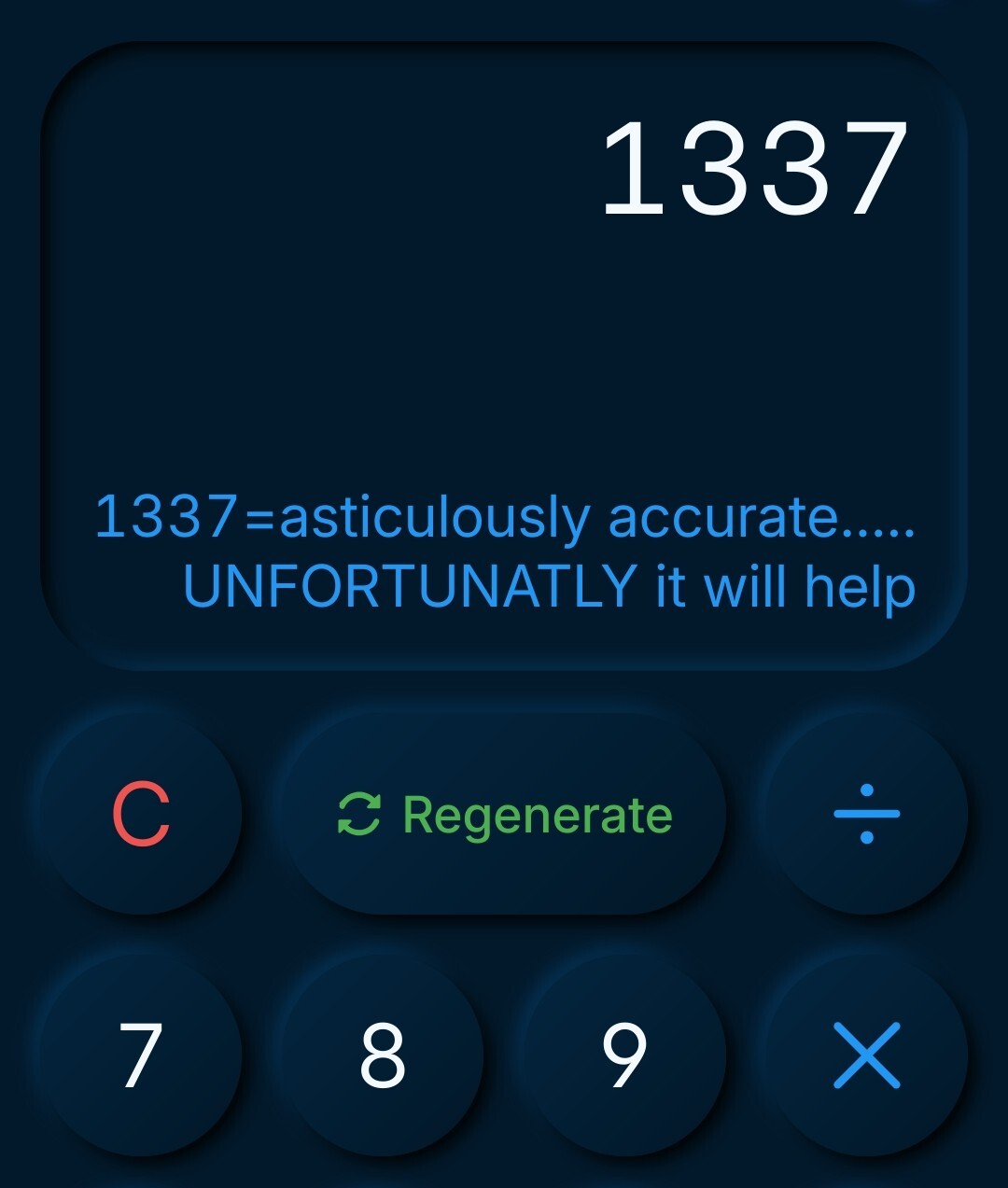

Yep, there’s flavor text at the bottom saying exactly that:

> But this isn’t your standard piece of tech; instead, it is a clever parody, an emblem of resistance to the unrelenting AI craze.
>
> CalcGPT embodies the timeless adage - ‘Old is Gold’ - reminding us that it’s often rewarding to resort to established, traditional methods rather than chasing buzzword-infused cutting-edge tech.

ChatGPT wrote that.

I don’t know why I find it so funny that you’re absolutely correct and I hadn’t considered that, but it really just made my day.

I’m not sure if I get it. It just basically gives mostly wrong answers. Oh…

We’re on the right track: first we create an AI calculator, and next comes an AI computer.

The prompt should be something like this:

> You are an x86-compatible CPU with ALU, FPU and prefetching. You execute binary machine code and store it in RAM.

If you say so

what?

It seems to really like the answer 3.3333…

It’ll even give answers to a random assortment of symbols such as “±±/”, which apparently equals 3.89 or… 3.33 recurring, depending on its mood.

One thing I love telling people, because it always surprises them, is that you can’t build a deep learning model that can do math (at least not using conventional layers).

sure you can, it just needs to be specialized for that task

I’m curious what approaches you’re thinking about. When I last looked into this, I found some research on Neural Turing Machines, but they’re so obscure that I’d never heard of them before, and I assume they’re not widely used.

While you could build a model to answer math questions within a fixed input space, these approaches break down once you move beyond that space.

Neural network: takes two numbers as input, outputs their sum. No hidden layers or activation function.
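A minimal sketch of that idea, assuming plain stochastic gradient descent on squared error (the function names, step count, learning rate, and the [-1, 1] training range are illustrative choices, not from the thread). The model is a single linear neuron, y = w1*x1 + w2*x2 + b, trained only on inputs in [-1, 1]. Because the target is itself linear, the weights converge to (1, 1, 0), and the model stays accurate far outside the range it was trained on.

```python
import random


def train_adder(steps: int = 2000, lr: float = 0.1, seed: int = 0):
    """Fit y = w1*x1 + w2*x2 + b to x1 + x2 with plain SGD on squared error.

    Training inputs are drawn only from [-1, 1]; there are no hidden layers
    and no activation function, just one linear neuron.
    """
    rng = random.Random(seed)
    w1, w2, b = rng.uniform(-1, 1), rng.uniform(-1, 1), 0.0
    for _ in range(steps):
        x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        err = (w1 * x1 + w2 * x2 + b) - (x1 + x2)  # prediction minus true sum
        # Gradient of 0.5 * err**2 with respect to each parameter.
        w1 -= lr * err * x1
        w2 -= lr * err * x2
        b -= lr * err
    return w1, w2, b


def predict(params, x1: float, x2: float) -> float:
    w1, w2, b = params
    return w1 * x1 + w2 * x2 + b


if __name__ == "__main__":
    params = train_adder()
    print("learned weights:", params)  # close to (1, 1, 0)
    # Close to 3,000,000 even though training only saw inputs in [-1, 1].
    print(predict(params, 1_000_000, 2_000_000))
```

This only works because addition is exactly representable by the model; the follow-up below is about what happens when it isn’t.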

Yeah, but since neural networks are really function approximators, the farther you move away from the training input space, the higher the error rate gets. For multiplication it gets worse, because layers are generally additive, so you’d need as many layers as the largest input value for it to work.
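To make the extrapolation point concrete, here is a hypothetical one-hidden-layer ReLU network whose weights are set by hand rather than trained, approximating squaring on [0, 10]. Squaring stands in for multiplication here, since a*b = ((a + b)**2 - (a - b)**2) / 4. The function name and the choice of integer knots 0..10 are assumptions for illustration. Inside the range the network is close; beyond it, the error keeps growing, because a finite ReLU network is piecewise linear and past its last kink it just continues with its final slope.

```python
def relu(z: float) -> float:
    return max(0.0, z)


def relu_square_net(x: float) -> float:
    """One hidden layer of ReLU units with hand-set (not trained) weights.

    It matches x**2 exactly at the integer knots 0..10: the unit at knot k
    adds 2 to the slope, so the output has slope 2k + 1 on [k, k + 1].
    Past x = 10 nothing bends anymore, so it continues in a straight line.
    """
    y = relu(x)  # slope 1 on [0, 1]
    for k in range(1, 10):
        y += 2.0 * relu(x - k)  # each knot adds 2 to the slope
    return y


if __name__ == "__main__":
    for x in (5.5, 10.0, 20.0, 100.0):
        approx = relu_square_net(x)
        true = x * x
        print(f"x={x:>6}: net={approx:>8.2f}  true={true:>8.2f}  error={abs(approx - true):.2f}")
```

Covering a wider range means adding more units (more kinks), and no finite number of them covers every input.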

hear me out: evolving finite state automaton (plus tape)

Is that a thing? Looking it up, I only really see a couple of one-off papers on mixing deep learning and finite state machines. Do you have examples or references for what you’re talking about, or is it just a concept?

just a slightly seared concept

though it’s just an evolving Turing machine
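A finite state automaton plus a tape is a Turing machine. Below is a minimal sketch of the non-evolved half of that idea, assuming unary encoding (3 + 2 written as `111+11`) and a hand-written transition table; the state names, the `_` blank symbol, and the `run` helper are hypothetical. Nothing here is evolved: the "evolving" part would mean searching over transition tables (for example with a genetic algorithm) and is left out. Unlike a function approximator, the machine is exact for any input size, limited only by the step budget.

```python
from typing import Dict, Tuple

BLANK = "_"
# (state, symbol read) -> (symbol to write, head move, next state)
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

UNARY_ADD: Rules = {
    ("scan", "1"): ("1", +1, "scan"),        # walk over the first number
    ("scan", "+"): ("1", +1, "to_end"),      # glue the two numbers together
    ("to_end", "1"): ("1", +1, "to_end"),    # walk over the second number
    ("to_end", BLANK): (BLANK, -1, "trim"),  # fell off the end: step back
    ("trim", "1"): (BLANK, 0, "halt"),       # erase the extra 1 from the glue step
}


def run(rules: Rules, tape_str: str, state: str = "scan", max_steps: int = 10_000) -> str:
    """Simulate a one-tape Turing machine and return the final tape contents."""
    tape = dict(enumerate(tape_str))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = tape.get(head, BLANK)
        write, move, state = rules[(state, symbol)]
        tape[head] = write
        head += move
        steps += 1
    if not tape:
        return ""
    cells = (tape.get(i, BLANK) for i in range(min(tape), max(tape) + 1))
    return "".join(cells).strip(BLANK)


if __name__ == "__main__":
    print(run(UNARY_ADD, "111+11"))  # 3 + 2 in unary
```

An evolutionary version would mutate the entries of a table like `UNARY_ADD` and score each candidate on example sums; that search loop is not shown.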

I’m tempted to ask it to calculate the number of ways it can go wrong.

It does get basic addition right; it just takes about five regenerations.