This started as a summary of a random essay Robert Epstein (fuck, that’s an unfortunate surname) cooked up back in 2016 for Aeon, “The Empty Brain”, and evolved into a diatribe about how the AI bubble affects how we think of human cognition.

This is probably a bit outside awful’s wheelhouse, but hey, this is MoreWrite.

The TL;DR

The general article concerns two major metaphors for human intelligence:

- The information processing (IP) metaphor, which views the brain as some form of computer (implicitly a classical one, though you could probably cram a quantum computer into that metaphor too)

- The anti-representational metaphor, which views the brain as a living organism, which constantly changes in response to experiences and stimuli, and which contains jack shit in the way of any computer-like components (memory, processors, algorithms, etcetera)

Epstein’s general view is, if the title didn’t tip you off, firmly on the anti-rep metaphor’s side, dismissing IP as “not even slightly valid” and openly arguing for dumping it straight into the dustbin of history.

His main piece of evidence for this is a basic experiment, where he has a student draw two images of a dollar bill - one from memory, and one with a real dollar bill as reference - and then compares the two.

Unsurprisingly, the image made with a reference blows the image from memory out of the water every time, which Epstein uses to argue against any notion of the image of a dollar bill (or anything else, for that matter) being stored in one’s brain like data in a hard drive.

Instead, he argues that the student re-experienced seeing the bill when drawing it from memory, and that they could only do so because seeing many a dollar bill up to that point had changed their brain in a way that enabled it.

Another piece of evidence he brings up is a 1995 paper from Science by Michael McBeath regarding baseballers catching fly balls. Where the IP metaphor reportedly suggests the player roughly calculates the ball’s flight path with estimates of several variables (“the force of the impact, the angle of the trajectory, that kind of thing”), the anti-rep metaphor (given by McBeath) simply suggests the player catches them by moving in a manner which keeps the ball, home plate and the surroundings in a constant visual relationship with each other.
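To make the contrast in the fly-ball example concrete, here is a minimal Python sketch under simplifying assumptions (no air drag, ball hit and caught at the same height, fielder moving only along the ball's line of flight). `landing_distance` is the "IP-style" route: solve the equations of motion for the landing point. The rest uses a simpler one-dimensional relative of McBeath's idea, often called optical acceleration cancellation, swapped in because it is easier to show: the fielder predicts nothing and only watches whether the tangent of their gaze angle to the ball is speeding up or slowing down. All names and numbers are illustrative, not taken from the paper.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2

def ball_position(speed, angle_deg, t):
    """Drag-free projectile launched from (0, 0) at the given speed and angle."""
    theta = math.radians(angle_deg)
    x = speed * math.cos(theta) * t
    y = speed * math.sin(theta) * t - 0.5 * G * t ** 2
    return x, y

def landing_distance(speed, angle_deg):
    """The 'IP-style' answer: solve for where the ball comes back down."""
    return speed ** 2 * math.sin(2 * math.radians(angle_deg)) / G

def tan_elevation(speed, angle_deg, t, fielder_x):
    """What the fielder actually sees: tangent of the upward gaze angle to the ball."""
    x, y = ball_position(speed, angle_deg, t)
    return y / (fielder_x - x)

def optical_acceleration(speed, angle_deg, fielder_x, t, dt=0.01):
    """Second finite difference of tan(elevation) over time."""
    before = tan_elevation(speed, angle_deg, t - dt, fielder_x)
    now = tan_elevation(speed, angle_deg, t, fielder_x)
    after = tan_elevation(speed, angle_deg, t + dt, fielder_x)
    return (before - 2 * now + after) / dt ** 2

def advice(accel, tol=1e-3):
    """Heuristic rule: keep the optical acceleration near zero, no trajectory model needed."""
    if accel > tol:
        return "back up"  # ball rises faster and faster in view: it will go overhead
    if accel < -tol:
        return "come in"  # ball's rise is slowing in view: it will drop in front
    return "hold"

d = landing_distance(30, 45)  # about 91.7 m for a 30 m/s hit at 45 degrees
for offset in (-10, 0, 10):
    print(offset, advice(optical_acceleration(30, 45, d + offset, 1.0)))
```

A fielder already standing at the landing spot sees the tangent of the gaze angle rise at a perfectly steady rate, so the rule says "hold"; standing 10 m short makes it accelerate ("back up"), and standing 10 m too deep makes it decelerate ("come in").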

The final piece I could glean from this is a report in Scientific American about the Human Brain Project (HBP), a $1.3 billion project launched by the EU in 2013, made with the goal of simulating the entire human brain on a supercomputer. Said project went on to become a “brain wreck” less than two years in (and eight years before its 2023 deadline) - a “brain wreck” Epstein implicitly blames on the whole thing being guided by the IP metaphor.

Said “brain wreck” is a good place to cap this section off - the essay is something I recommend reading for yourself (even if I do feel its arguments aren’t particularly strong), and it’s not really the main focus of this little ramblefest. Anyways, onto my personal thoughts.

Some Personal Thoughts

Personally, I suspect the AI bubble’s made the public a lot less receptive to the IP metaphor these days, for a few reasons:

- Artificial Idiocy

The entire bubble was sold as a path to computers with human-like, if not godlike, intelligence - artificial thinkers smarter than the best human geniuses, art generators better than the best human virtuosos, et cetera. Hell, the AIs at the centre of this bubble are running on neural networks, whose functioning is loosely based on our current understanding of how the brain works. [Missed this incomplete sentence first time around :P]

What we instead got was Google telling us to eat rocks and put glue in pizza, chatbots hallucinating everything under the fucking sun, and art generators drowning the entire fucking internet in pure unfiltered slop, identifiable in the uniquely AI-like errors it makes. And all whilst burning through truly unholy amounts of power and receiving frankly embarrassing levels of hype in the process.

(Quick sidenote: Even a local model running on some rando’s GPU is a power-hog compared to what it’s trying to imitate - digging around online indicates your brain uses only about 20 watts of power to do what it does.)

With the parade of artificial stupidity the bubble’s given us, I wouldn’t fault anyone for coming to believe the brain isn’t like a computer at all.

- Inhuman Learning

Additionally, AI bros have repeatedly and incessantly claimed that AIs are creative and that they learn like humans, usually in response to complaints about the Biblical amounts of art stolen for AI datasets.

Said claims are, of course, flat-out bullshit - last I checked, human artists only need a few references to actually produce something good and original, whilst your average LLM will produce nothing but slop no matter how many terabytes upon terabytes of data you throw into its training set.

This all arguably falls under the “Artificial Idiocy” heading, but it felt necessary to point out - these things lack the creativity or learning capabilities of humans, and I wouldn’t blame anyone for taking that to mean that brains are uniquely unlike computers.

- Eau de Tech Asshole

Given how much public resentment the AI bubble has built towards the tech industry (which I covered in my previous post), my gut instinct’s telling me that the IP metaphor is also starting to be viewed in a harsher, more “tech asshole-ish” light - not merely as a reductive/incorrect view of human cognition, but as a sign you put tech over human lives, or don’t see other people as human.

Of course, AI providing a general parade of the absolute worst scumbaggery we know (with Mira Murati being an anti-artist scumbag and Sam Altman being a general creep as the biggest examples) is probably helping that along, as are all the active attempts by AI bros to mimic real artists (exhibit A, exhibit B).

RE: the IP perspective on brains,

From my perspective, science is catching up on this - in pockets. It goes under a lot of different names, and to be honest it’s been around in many forms for a long time. I highly, highly recommend catching up on Dr. Michael Levin and related work on this. There is still some speculation here, but there’s hard science and empirical observation indicating that, broadly, the standard neuro story of memory living in synapses doesn’t work. The alternative, that nearly every part of the body is both capable of independent problem solving and memory, puts actionable medical alternatives on the table.

The long story is that memory appears to be entirely opportunistic. Memory can be, and is, stored in virtually everything a body gets access to, internally AND externally. The brain’s main function is to re-imagine and reinterpret memory, not to be dictated by it. A memory isn’t a fact in a broad sense; it’s a dynamic that acts on the body. This is different in many ways from the hard division we try to make in modern computer design (although I’d argue that even the difference between memory and instruction in von Neumann design continues to fall apart over time).

That said, and I realize this is a semantic, linguistic issue, but I do believe brains are computers, but only in the broadest sense of what computation could be, not in the highly specific sense of them being digital von Neumann devices. What’s often missed in discussions about a computational world view is making clear to the reader that there is no privileged sort of computation. There’s nothing at all special or privileged about what our digital von Neumann machines are, other than, in a sense, their being metabolisms that are functionally different from us.

When I express that brains are computers, I like to add things like, “in the sense that dance is computation, or politics is economics, or matter is experience, or money is culture.” Which is to say they can be different and yet the same, depending entirely on the perspective of what you mean by something “being” anything at all. (It’s similar to why ontologies are both useful and always wrong.)

The bitter truth, though, is that I don’t think there is anything privileged about the human brain either - but not in the sense of there being no difference. I think quite the opposite: seeing many of /the other things/ as capable, but as having their own sorts of attendance and meaning, raises much richer questions of “why are we different, then?” Certainly richer than presuming that it is the capability of any particular thing that separates us from the other things.

Ultimately, I love artists because I want an ecosystem of art and artists and art admirers, and because I think respect should transcend form, not reductively seek the most commoditized realization of it.

In many ways… isn’t this what indigenous cultures already more or less believed?

but I do believe brains are computers, but only in the broadest sense of what computation could be

Agree. A human brain is capable of executing the steps of a TM with pen/paper, and in that sense the brain is absolutely capable of acting as a computer. But as far as all the other processes a brain does (breathing/maintaining heart rate/etc.), describing that as ‘a computer’ seems such an abuse of notation as to render the original definition meaningless. We might as well call the moon a computer since it is ‘calculating’ the effect of a gravitational field on a moon sized object. What I think many people are really claiming when they say a brain is a computer is that if only we could identify the correct finite state deterministic program, there would be no difference between the brain and its implementation in silicon. Personally, I find claims of substrate independence to be less plausible, but of course many of our dear friends are willing to bite that bullet.
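As a concrete picture of "executing the steps of a TM with pen/paper", here is a minimal one-tape Turing machine simulator in Python. The rule table `INCREMENT` (add 1 to a binary number) and the function name `run_tm` are made up for illustration; each loop iteration is one step a person could just as well do by hand: read the symbol under the head, look up the rule, write, move, change state.

```python
def run_tm(rules, tape, state="start", head=0, halt="halt", blank="_", max_steps=10_000):
    """Run a one-tape Turing machine given as (state, symbol) -> (write, move, next_state)."""
    cells = dict(enumerate(tape))  # sparse tape: unwritten cells read as blank
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, cells.get(head, blank))]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)

# Hypothetical example machine: binary increment.
INCREMENT = {
    ("start", "0"): ("0", "R", "start"),  # scan right to the end of the number
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),  # step back onto the last digit
    ("carry", "1"): ("0", "L", "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", "L", "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", "L", "halt"),   # ran off the left end: new leading 1
}

print(run_tm(INCREMENT, "1011"))  # 1100
```

Nothing in the loop needs silicon: it is a lookup table plus bookkeeping, which is the narrow sense in which a person with pen and paper acts as a computer.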

We might as well call the moon a computer since it is ‘calculating’ the effect of a gravitational field on a moon sized object.

Yes. In fact, that’s sort of my point. There is no privileged sense of computation. Things can be different even if they do have invariants.

But as far as all the other processes a brain does (breathing/maintaining heart rate/etc.), describing that as ‘a computer’ seems such an abuse of notation as to render the original definition meaningless.

I tend to agree that oftentimes the terminology of ‘attendance’ is better than the terminology of computation, but I don’t think keeping the computer metaphor is -entirely- meaningless, because I do think it has practical implications.

At the risk of going down another rabbit hole, I’d really say that the Free Energy Principle does a pretty good job of showing why keeping a wide, but nonetheless useful, definition of computation on the table can be useful: as a principled tool that can shed some light on scale-free dynamics (not as an absolute, definitive answer to all questions).

https://www.youtube.com/watch?v=KQk0AHu_nng

Maybe another reason I’m ok with the computer metaphor (in which we retain the lack of privilege, and in which the attendance metaphor is kept) is that it does sort of provide us some interesting technical intuitions, too. Like how the maximum power principle affects the design and building of technology of all kinds (whether it’s chemistry, electronics, energy, or gardening), how ambiguity (that is, the unknowable embedded environment) is an important functional element of deploying any sort of technology (or policy, or behavior), and, yeah.

One day, the fact that simple and even slow things (like water, or the moon, or chemicals, or rocks, or animals) are computationally capable, but attend to different things, is in fact going to be meaningful and important.

i dunno, this seems to me to lead in a straight line to Chalmers claiming rocks could be conscious because you can’t prove they’re not.

sure you can expand “computation” to things outside Turing, but then you’re setting yourself up for equivocation

I definitely don’t claim anything about consciousness. But I also don’t think things have to be conscious to be interesting, or for me to care about them.

Hell, my mom is dead, and definitely not conscious. But I still think about her and care about her. And my memories of her still impact my life and behavior in strange ways.

I get where you’re coming from, and I’m not trying to make normalizing, reductive claims that things -are the same-. But things that differ in some ways can also share things in others. I think it is a useful perspective to have.

Computation and computer metaphors are helpful, at least to my thinking. But even I don’t argue that it’s a privileged position. Lots of words and metaphors can work.

I wouldn’t take Chalmers’ opinions on things that seriously. Chalmers is a metaphysical realist, a very dubious philosophical position, and thus all his positions are inherently circular.

Metaphysical realism presumes dualism from the get-go: that there is some fundamental gap between an unobservable “objective” reality beyond everything we can ever hope to perceive, and everything we do perceive, which is somehow not real and a unique property associated with mammalian brains. To be not real suggests it is outside of reality, that it somehow transcends reality.

This was what Thomas Nagel argued in his famous paper “What Is It Like to Be a Bat?”, and Chalmers merely cites this as the basis for saying the brain has a property that transcends reality. He then asks: if explaining the function of the brain (what he calls the “easy problem”) is not enough to explain this transcendence, how is it that an entirely invisible reality gives rise to the reality we observe?

But the entire thing is circular, as there’s no convincing justification the brain transcends reality in the first place, and you only run into this “hard problem” if you presume such a transcendence takes place. Bizarrely, idealists and dualists love to demand that people who are not convinced that this transcendental “consciousness” even exists have to solve the “hard problem” or idealism and dualism are proven. But it’s literally the opposite: idealism and dualism (as well as metaphysical materialism) are entirely untenable positions until they solve the philosophical problem their position creates.

I am especially not going to be convinced that this transcendental consciousness even exists if, as Chalmers has shown, it leads to “hard” philosophical paradoxes. Metaphysical realists for some reason don’t see their philosophy leading to a massive paradox as a reason for questioning its foundations, but then turn around and insist reality itself must be inherently paradoxical, that there really is a fundamental gap between mind and body. Chalmers himself is a self-described dualist.

It’s from this basis that Chalmers says you cannot prove whether or not something is conscious, because for him consciousness is something transcendental that we can’t concretely tie back to anything demonstrably real. It has no tangible definition; there is no set of observables associated with it. If you have one transcendentally conscious person next to another non-transcendentally conscious person, Chalmers would say that there is simply no conceivable observation you could ever make to distinguish between the two.

Yet, if there are no conceivable ways to distinguish the two, then this transcendental property of “consciousness” is just not conceivable at all. It’s a word without concrete meaning, a floating abstraction, and should not be taken particularly seriously. At least, not until Chalmers solves the hard problem of consciousness and proves his metaphysical realist worldview can be made internally consistent; only then will I take his philosophy as even worthy of consideration.

Well, we could argue that computers don’t really “compute,” either. What a computer does is measure the flow of electrons through a transistor, albeit billions of them. If the flow of electrons passes an arbitrary threshold on a certain transistor, then we call it a “1”. If it doesn’t, we call it a “0”. The “computation” is just us interpreting the flow of electrons into something more useful. It was explained to me that the “threshold” was over and under 5 volts, but I think if you put 5 volts into a modern transistor it would just fry it.
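To put rough numbers on the threshold idea, here is a small Python sketch of how a digital input reads a voltage as a bit. It assumes classic 5 V TTL logic, where 5 V is the supply voltage rather than the threshold: an input at or below 0.8 V is read as 0, one at or above 2.0 V as 1, and anything in between is undefined. Modern chips run at much lower voltages with different thresholds, and the function names here are made up for illustration.

```python
from typing import List, Optional

# Classic 5 V TTL input thresholds (other logic families use different numbers).
V_IL_MAX = 0.8  # volts: at or below this, the input is read as 0
V_IH_MIN = 2.0  # volts: at or above this, the input is read as 1

def read_bit(volts: float) -> Optional[int]:
    """Interpret one measured voltage as a bit, or None if it falls in the undefined band."""
    if volts <= V_IL_MAX:
        return 0
    if volts >= V_IH_MIN:
        return 1
    return None

def read_number(voltages: List[float]) -> int:
    """Interpret a row of voltages (most significant first) as an unsigned integer."""
    value = 0
    for v in voltages:
        bit = read_bit(v)
        if bit is None:
            raise ValueError(f"{v} V is neither a clean 0 nor a clean 1")
        value = value * 2 + bit
    return value

print(read_number([3.4, 0.2, 4.9, 0.5]))  # 10, i.e. binary 1010
```

The `None` band is the interpretation point in miniature: the physics is a continuous voltage, and "0" or "1" only exists once we pick thresholds and agree to read it that way.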

Obviously, because our brains are made of cells instead of silicon transistors, we wouldn’t “compute” the same way a transistor does. If we decide that computation is only something that transistors can do, then obviously the brain couldn’t compute, but, for now, that line would be arbitrary.

This definitely reminds me of something I heard in the above video, which I think is super important. Like, of course things like memory or computers are metaphors. But isn’t everything metaphors? To your point, the “computation” of a transistor is in fact our interpretation of an activity that obviously isn’t actually the thing we’re seeing it as. Even a von Neumann machine isn’t actually a Turing machine - it has practical limitations (finite memory, for one) that theoretical Turing machines don’t!

But just because something or anything is a metaphor doesn’t mean it isn’t useful. It’s just incomplete.

Careful David, if you deny that rocks (and therefore the moon) are conscious, you might make them angry.

Fair enough!

The alternative, that nearly every part of the body is both capable of independent problem solving and memory

Talked to my therapist about this today. This has put to words something I’ve been feeling. Thank you for that bit of epistemological justice.

as everyone knows, the brain is very like a steam engine

what are you on? The brain is clearly a series of pipes that water flows through

a very moist internet, if you will

A useful metaphor perhaps, but more accurately the brain is an intricate series of clockwork gears and linkages that connect the machineries of the body to the levers and knobs of the soul.

your brain: disorganised screaming

my brain: chugga chugga chugga chugga chugga chugga chugga chugga choo choo

Guess that answers how many chuggas before a choo choo

His main major piece of evidence for this is a basic experiment, where he has a student draw two images of dollar bills - one from memory, and one with a real dollar bill as reference - and compare the two.

Unsurprisingly, the image made with a reference blows the image from memory out of the water every time, which Epstein uses to argue against any notion of the image of a dollar bill (or anything else, for that matter) being stored in one’s brain like data in a hard drive.

To be frank, it feels like I’m being told that the Riemann hypothesis is incorrect on the basis that 1 + 1 = 2

Sure, maybe brains don’t actually compute anything, but “Our memory is faulty” would be the first step in getting to the evidence, not the evidence itself, assuming “our memory is faulty” is even the direction we need to go.

It could easily be argued, with just as much evidence, that our brains prioritized efficiency over accuracy in their algorithms, and that’s why it’s harder to recall an accurate image of a dollar bill.

I think a more likely takeaway is that the brain doesn’t remember a dollar bill as an image, but as, y’know, a dollar bill. The color, rough dimensions, picture of a dead president, and numbers in the corners were probably right, but it’s more important to remember the social, emotional, and cultural context of it (e.g. that money can be exchanged for goods and services) rather than the specific details of the anti-counterfeiting patterns, which are intentionally designed to be “noise” and hard to replicate. In the IP metaphor, we could talk in terms of lossy compression of visual data.
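The lossy-compression framing can be illustrated with a toy one-dimensional example in Python. This is a hypothetical block-average-and-quantize scheme, not how any real image codec (or the brain) works: it keeps the coarse structure of a signal (the gist of a dollar bill) and throws away a fine alternating pattern standing in for the deliberately hard-to-copy security detail.

```python
def compress(signal, block=4, levels=4, max_value=255):
    """Toy lossy codec: average each block, then snap the average to a few levels."""
    step = max_value / (levels - 1)
    codes = []
    for i in range(0, len(signal), block):
        chunk = signal[i:i + block]
        codes.append(round(sum(chunk) / len(chunk) / step))
    return codes

def decompress(codes, block=4, levels=4, max_value=255):
    """Rebuild a signal at the original resolution from the coarse codes."""
    step = max_value / (levels - 1)
    out = []
    for code in codes:
        out.extend([code * step] * block)
    return out

# Hypothetical one-dimensional "image": a dark half and a light half (the gist),
# overlaid with a fine alternating pattern (standing in for hard-to-copy detail).
gist = [50] * 8 + [200] * 8
detail = [20, -20] * 8
original = [g + d for g, d in zip(gist, detail)]

codes = compress(original)
print(codes)  # [1, 1, 2, 2]
```

Decompressing `[1, 1, 2, 2]` gives a flat dark half and a flat light half: the overall picture survives, the fine pattern is gone, and no amount of decoding will bring it back.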

And that’s why I think the IP metaphor is useful. It’s all well and good to talk about the brain as being fundamentally different from a computer, but in terms of discrete functions we know how computers work at a level we don’t for the brain, and so it’s useful to be able to analogize.

So basically my body has experienced the LLM bubble and those experiences smeared across my brain so that when I later consider if brains are computers my little grey cells remember all the bad experiences and revolt and make me think about cat-boys instead. Whoa, the math checks out!

Re: the IP vs. organism point. I gotta admit I’m struggling to see how anyone could think people are like computers in the first place when we’re so obviously not. I had a bunch of jumbled thoughts written out, but they all really boiled down to this.

Like I understand that some people think computers and people are similar* but I don’t. I don’t understand it at all. Today computers are good at running electricity down wires really really fast to do stuff like execute x86_64 assembly language, while humans are way better at everything else **. And then there’s stuff like encephalitis and gut brain connection which are very organic things which can influence thinking. And then and then like (handwaves) computers don’t experience at all aahhhh

* There was that Google programmer who became convinced LaMDA was sentient

** Arguably humans are also better at x86_64 assembly language than computers are, except for speed, given that: 1. we created it; 2. if you gave us a block of assembly language with a bug somewhere in the middle, we’d have a better chance of finding it; 3. we can edit it.

Same, I’m not quite sure what the argument is. Also, it seems that a lot of the AI hate comes from how capitalism uses it and hypes it. That is not the fault of the poor innocent AI!

I also don’t see how you could plausibly say that AI isn’t creative at all. If you took the least creative humans on earth and compared them to LLMs in some sort of designed experiment, I’m pretty sure the LLMs would win against some percentage of humanity. In the coming decades this percentage of “more creative than X percent of humans” will increase.

THAT is a deep insult to our collective human psyche. THAT is where I suspect a lot of AI hate comes from. A lot of the arguments against AI hype are just rationalizations of problems that are ultimately the fault of capitalism or greedy stupid people (e.g. tech bros).

It also seems to me that LLMs represent creativity without general intelligence, and that fundamentally limits them. They can’t prompt themselves or understand what they created. Again, for now. What this shows is that if you throw enough data and computing power at the problem, it solves it, just like nature did with natural selection. For now it’s only like one small portion of the human mind. Cobble together enough pieces and we might be closer to AGI than we’d like. Some seem to view this thought as some sort of heresy.

It would be more important to argue for more ethical control of AI outside of profit motives and dogmatic views on copyright. We should develop “artificial ethics” first before we try to extract profit from the advances. The strong emotional reactions will only be exploited so the capitalists will get to control for the worst possible outcomes.

What do you mean here by “creativity”?

What specific definition or type of creativity doesn’t really matter (apparently there are 100s of definitions) as long as you could design a reasonable experiment to measure it in a double blind study.

For example, ask 1,000 random humans and an LLM to write a poem about some random topic and then have 1,000 English teachers grade them for creativity. I would expect a percentage of humans to consistently score lower for creativity than LLMs.

PS: That doesn’t mean they wouldn’t be garbage poems. Maybe you would need a control sample of basically random words that still form grammatically correct sentences / poems.

PPS: There is already some thought about computational creativity
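For the blinding part of a study like the one proposed above, here is a minimal Python sketch with hypothetical helper names: entries from both groups are shuffled and given opaque IDs, graders see only the ID and the text, and the ID-to-group key is used only after grading to average scores per group. It covers only that bookkeeping; it does not address how creativity itself would be defined or scored.

```python
import random

def blind(entries, seed=None):
    """Shuffle (group, text) entries and hide each group behind an opaque ID.

    Returns the anonymized items for graders and a separate key for unblinding later.
    """
    rng = random.Random(seed)
    shuffled = list(entries)
    rng.shuffle(shuffled)
    items, key = [], {}
    for i, (group, text) in enumerate(shuffled):
        item_id = f"item-{i:04d}"
        items.append((item_id, text))  # graders only ever see the ID and the text
        key[item_id] = group           # held by someone who does no grading
    return items, key

def unblind(scores, key):
    """Average the graders' scores per group once all grading is finished."""
    totals = {}
    for item_id, score in scores.items():
        group = key[item_id]
        total, count = totals.get(group, (0.0, 0))
        totals[group] = (total + score, count + 1)
    return {group: total / count for group, (total, count) in totals.items()}
```

Keeping the key out of the graders' hands is what makes the grading blind; the shuffle stops position in the pile from leaking which group an entry came from.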

as long as you could design a reasonable experiment to measure it in a double blind study

no

Creativity is an inner mental process, not a content creation machine. The output is irrelevant.

Yeah an inner mental process that has been replicated - well to a (very) limited degree. My argument is that this is exactly what is so offensive to people. The painful realization or disillusionment that one of the things we held most special about being human, turns out is not so special after all. I never would have predicted that before GPT-3. I look at movies or stories with a character that is an artistic archetype differently now.

Meat good! Silicon bad!

Yeah an inner mental process that has been replicated - well to a (very) limited degree.

Given that you refuse to provide a definition of creativity, this is not something that you (specifically you, not the generic) are allowed to believe.

My argument is that this is exactly what is so offensive to people.

Your argument is wrong. People who are “offended” by AI don’t believe that AI is doing anything that humans are doing beyond formulaic bullshit and plagiarism.

The painful realization or disillusionment that one of the things we held most special about being human, turns out is not so special after all.

If you or a loved one has experienced said painful disillusionment, my advice would be to go outside, touch grass, i.e. experience what it means to be human, because your conception of such is lacking.

I never would have predicted that before GPT-3. I look at movies or stories with a character that is an artistic archetype differently now.

You never saw a robot in fiction, specifically the many robots that are characterised to be all but human? The ones designed to be mirrors to humanity so that we can gain perspective on exactly what makes us human? Roy Batty? Data? Bender Bending Rodriguez?

Meat good! Silicon bad!

They label those packets specifically “do not eat”, I guess you had to learn the hard way.

so your posts are all utterly wrong but they’re also not interesting enough to make fun of, so I’m just gonna make them and you disappear

also not interesting enough to make fun of

Oof, I’m out here catching strays from @self

the rest of their posts are a trip (and not a great one)

Of course! Who needs a proper definition for a measured quantity before designing an experiment? Of course! The limited scope of grading papers by English teachers is the best possible proxy metric. Of course! Intent and agency aren't really important; after all, they aren't fully scrutable from the words on a page, so form is obviously the only thing that counts.

Well lol I’m sure my ad-hoc suggestion for an experiment could be improved and a better definition could be found without affecting the presumed outcome.

My point is that there is a lot of anger and bias and fallacies surrounding this.

If I were really advocating for LLMs here I could point out that arguably the intense training process might smear data from individual data points (a PNG of a one dollar bill) into experiences (a general understanding of dollar-bill-ness), bringing computers closer to the organic mind. After all, LLM outputs aren’t always word-for-word matches.

Where this argument falls apart is that it clearly doesn’t work in practice yet. An LLM can get its output statistically reasonable, but it isn’t actually experiencing. If it had truly experienced math it wouldn’t have such a hard time answering simple math questions. If it had truly experienced the collective literature of the human race then it wouldn’t write such soulless poetry (or equivalently / alternatively soulful poetry requires more than reading stuff).

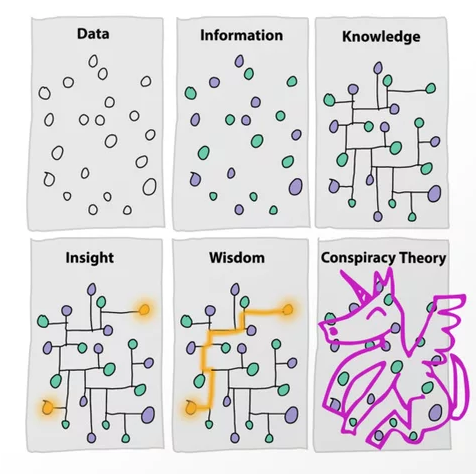

(Also gosh dang, I just expressed that meme image about information, data, knowledge, and wisdom in comment form, didn’t I?)

(Also gosh dang I just expressed that meme image about information, data, knowledge, wisdom, in comment form didn’t I)?

I have no clue what you’re talking about.

Many other versions listed here: https://languagelog.ldc.upenn.edu/nll/?p=52581