As suggested in this thread, to general “yeah sounds cool”. Let’s see if this goes anywhere.

Original inspiration:

The post-Xitter web has spawned soo many “esoteric” right-wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

If your sneer seems higher quality than you thought, feel free to make it a post; there’s no quota here.

There isn’t really a suitable awful.systems sub to put it in, but I thought I’d note here that Stonetoss got doxxed thoroughly just to increase the general good cheer and bonhomie

In February of 2021 the far-right social media platform Gab experienced a data breach resulting in the exposure of more than 70 gigabytes of Gab data, including user registration emails and hashed passwords. Like many of those on the far-right, Red Panels had a presence on Gab, so we consulted the now-public data set from the Gab exposure. We learned that the “@redpanels” account had been registered with the email hgraebener@*****.com.

womp womp

Graebener was part of an Open iT delegation to Japan in May 2019 and appeared in photos of this on the Open iT LinkedIn page. […]. During the same time, StoneToss was eager to let his fans know that he had arrived in Japan, writing on Twitter, “Finally made it to the ethnostate, fellas.”

Oh, so that’s why Japan is so damn popular on HN.

yes, that and the other thing

stonetoss

What a botched circumcision does to a mf

It appears that many of you have been hiding full-blown hardout sneers from SneerClub, and I am baffled as to why.

Impostor syndrome

evidently we were just too good and they were intimidated

So today I learned there are people who call themselves superforecasters®. Neat!

The superforecasters® have had a melding of the minds and determined that covid-19 was 75% likely to not be a lab leak. Nifty! This is useless to me!

Looking at the website of these people with good enough judgement to call themselves “Good Judgement”, you can learn that 100% of superforecasters® agree that there will be fewer than 100 deaths from H5N1 this year. I don’t know much about H5N1, but I guess that makes sense given that it’s been around since 1996 and would need a mutation to be contagious among humans.

I found one of the superforecaster®-trainee discussion topics where they reveal some of the secrets to their (super)forecasting(®)-trainee instincts

I have used “Copilot” LLM AI to point me in the right direction. And to the point of the LLM they have been trained not to give a response about conflict as they say they are trying to permote peace instead of war using the LLM.

Riveting!

Let’s go next to find out how to give up our individuality and become a certified superforecaster® hive brain.

To minimize the chance that outstanding accuracy resulted from luck rather than skill, we limited eligibility for GJP superforecaster status to those forecasters who participated in at least 50 forecasting questions during a tournament “season.”

Fans of certain shonen anime may recognize this technique as Kodoku – a deadly poison created by putting a bunch of insects in a jar until only one remains:

100 species of insects were collected, the larger ones were snakes, the smaller ones were lice, Place them inside, let them eat each other, and keep what is left of the last species. If it is a snake, it is a serpent, if it is a louse, it is a louse. Do this and kill a person.

“But what’s the catch, Saturn?” I can hear you say. “Surely this is somehow a grift for nerds, or a way to fleece money out of governments.”

Nonono, you’ve got completely the wrong idea. Good Judgement offers a $100 Superforecasting Fundamentals course out of the goodness of their heart, I’m sure! I mean, after all, if they spread Superforecasting to the world, then their Hari-Seldon-esque hivemind would lose its competitive edge, so they must not be profit-motivated.

Anyway if you work for the UK they want to hear from you:

If you are a UK government entity interested in our services, contact us today.

Maybe they have superforecasted the fall of the British Empire.

And to end this, because I can never resist a web design sneer:

Dear programmers: if you apply the CSS `word-break: break-all;` to the string “Privacy Policy”, it may end up rendered as “Pr[newline]ivacy Policy”, which unfortunately looks pretty unprofessional :(

lmao this is one of my all-time favorite grifts. I’ve never understood why it isn’t more popular among us connoisseurs. it’s so baldfaced to say “statistically, someone probably has oracular powers, and thanks to science, here they are. you need only pay us a small incense and rites fee to access them”

IMO, because the whole topic of superforecasters and prediction markets is both undercriticized and kaleidoscopically preposterous in a way that makes it feel like you shouldn’t broach the topic unless you are prepared to commit to some diatribe-length posting.

Which somebody should. It’s a shame there is as yet no single place you can point to and say “here’s why this thing is weird and grifty and pretend science while strictly promoted by the scientology of AI, and also there’s crypto involved”.

Isn’t it weird these people came out of internet atheism of all things and go right into this stuff?

It’s really gotta be emphasised that these guys didn’t come out of internet atheism, and frankly I would really like to know where that idea came from. It’s a completely different thing which, arguably, predates internet atheism (if we read “internet atheism” as beginning in the early 2000s, though we could obviously push that date back much earlier). These guys are more or less out of Silicon Valley. Émile P. Torres has coined the term “TESCREALS” (modified to “TREACLES”) for (and I had to google this even though I know all the names independently) “Transhumanism, Extropianism, Singularitarianism, Cosmism, Rationalism, Effective Altruism, and Longtermism”.

It’s a confluence of futurism cults which primarily emerged online (even on the early internet), but also in airport books by e.g. Ray Kurzweil in the 90s, and has gradually made its way into the wider culture, with EA and longtermism now its most successful outgrowths in the academy.

Whereas internet atheism kind of bottoms out in 1990s polemics against religion - nominally Christianity, but ultimately fuelled by the end of the Cold War and the West’s hunger for a new enemy (hey look over there, it’s some brown people with a weird religion) - the TREACLES “cluster of ideologies” (I prefer “genealogy”, because this is ultimately about a political genealogy) has deep roots in the weirdest end of libertarian economics/philosophy and rabid anti-communism. And therefore the Cold War (and even pre-Cold War) need for a capitalist political religion. OK the last part is my opinion, but (a) I think it stands up, and (b) it explains the clearly deeply felt need for a techno-religion which justifies the most insane shit as long as there’s money in it.

Yeah, I hung out a lot in Internet skeptic/atheist circles during the 2005-10 era, and as far as I can recall, the overlap with LessWrong, Overcoming Bias, etc., was pretty much nil. This was how that world treated Ray Kurzweil.

I read

Most of it was exactly like the example above: Kurzweil tosses a bunch of things into a graph, shows a curve that goes upward, and gets all misty-eyed and spiritual over our Bold Future. Some places it’s OK, when he’s actually looking at something measurable, like processor speed over time. In other places, where he puts bacteria and monkeys on the Y-axis and pontificates about the future of evolution, it’s absurd. I am completely baffled by Kurzweil’s popularity, and in particular the respect he gets in some circles, since his claims simply do not hold up to even casually critical examination.

and immediately thought someone should introduce PZ Myers to rat/EA as soon as possible.

Turns out he’s aware of them since at least 2016:

I’m afraid they are. Google sponsored a conference on “Effective Altruism”, which seems to be a code phrase designed to attract technoloons who think science fiction is reality, so the big worries we ought to have aren’t poverty or climate change or pandemics now, but rather, the danger of killer robots in the 25th century. They are very concerned about something they’ve labeled “existential risk”, which means we should be more concerned about they hypothetical existence of gigantic numbers of potential humans than about mere billions of people now. You have to believe them! They use math!

More recently, as an evolutionary biologist, he apparently has thoughts on the rat concept of genetics: “The eugenicists are always oozing out of the woodwork”

FWIW I used to read PZM quite a bit before he pivoted to doing YouTube videos, which I don’t have the patience for, and he checked out of the new atheist movement (such as it was) pretty much as soon as it became evident that it was gradually turning into a safe space for Islamophobia and misogyny.

PZ is aware and thinks they’re bozos. As a biologist, he was particularly pointed about cryonics.

Technoloons is a good word, going to have to remember that

I wonder if the existence of RationalWiki contributes to the confusion. Even though it’s unrelated to and critical towards TREACLES, the name can cause confusion.

I know what you’re thinking.

You’re thinking Saturn, that could have been a post!

I know, I know, but I can’t handle that kind of pressure. If someone else wants to make a post about this, or prediction markets, don’t let me stop you. It’s an under-sneered area at the intersection of tech weirdos, that other kind of tech weirdos, and that third kind of tech weirdos.

If you have ever wondered why so many Rationalists do weird end-of-year predictions and keep stats on them, it is because they all want to become superforecasters. (And remember: by correctly forecasting trivial things that are sure to happen, you can increase your percentage of correct forecasts and become a superforecaster yourself. For that reason, never try to forecast black swans (or just predict they will not happen, for more superforecast points).)
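To put rough numbers on the padding trick: a minimal sketch, assuming a hypothetical forecaster with 20 genuinely hard questions answered at coin-flip level, who then pads the record with trivial forecasts that always resolve correctly. It uses the raw percentage-correct framing from the comment, not a proper scoring rule, and `padded_accuracy` is a made-up helper for illustration.

```python
def padded_accuracy(hard_correct: int, hard_total: int, padding: int) -> float:
    """Share of correct forecasts after adding `padding` trivial ones.

    Assumption: every trivial forecast ("the sun will rise tomorrow")
    resolves correctly, so each one adds 1 to numerator and denominator.
    """
    return (hard_correct + padding) / (hard_total + padding)


if __name__ == "__main__":
    # Hypothetical record: 10 out of 20 hard questions right (a coin flip).
    for padding in (0, 30, 80, 180):
        share = padded_accuracy(10, 20, padding)
        print(f"{padding:>3} trivial forecasts -> {share:.0%} correct")
```

The hard-question skill never changes; only the denominator gets stuffed, and the headline number climbs from 50% to 95%.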

See also: you could have been a winner! And gotten a free sub to ACX!

this is what literary Bayesianism promises: the ability to pluck numbers out of your ass and announce them in a confident voice

Fans of certain shonen anime may recognize this technique as Kodoku – a deadly poison created by putting a bunch of insects in a jar until only one remains

I understood this reference. I know it as Gu poison, which is listed in the wikipedia article you linked!

To minimize the chance that outstanding accuracy resulted from luck rather than skill, we limited eligibility for GJP superforecaster status to those forecasters who participated in at least 50 forecasting questions during a tournament “season.”

When I was a kid I read a vignette of a guy trying to scam people into thinking he was amazing at predicting things. He chose 1024 stockbrokers, picked one stock, and in 512 envelopes he said the stock would be up by the end of the month, and in the other 512 he said it would go down. You can see where this story is going, i.e. he would be left with one person thinking he predicted 10 things in a row correctly and was therefore a superforecaster. This vignette was great at illustrating to child me that predicting things correctly isn’t necessarily some display of great intelligence or insight. Unfortunately what I didn’t know is that it was setting me up for great disappointment when after that point and forevermore, I would see time and time again that people would fall for this shit so easily.

(For some reason when I try to think of where I read that vignette, Vonnegut comes to mind. I doubt it was him.)
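The arithmetic of the envelope scam is just repeated halving. A minimal sketch, assuming the numbers from the vignette (1024 recipients, one up/down call per round); `run_scam` is a hypothetical name for illustration. It also tallies how many letters the scammer has to mail in total, which comes to roughly twice 1024.

```python
from __future__ import annotations


def run_scam(recipients: int, rounds: int) -> tuple[list[int], int]:
    """Simulate the split-the-mailing-list "prediction" scam.

    Each round, half the remaining recipients are told "up" and half "down".
    Whatever the stock does, only the half that got the right call is kept,
    so every survivor has seen one more correct prediction in a row.
    Returns (survivors after each round, total letters mailed).
    """
    remaining = recipients
    survivors: list[int] = []
    letters_sent = 0
    for _ in range(rounds):
        letters_sent += remaining  # everyone still on the list gets a letter
        remaining //= 2            # odd list: assume the smaller half was right
        survivors.append(remaining)
    return survivors, letters_sent


if __name__ == "__main__":
    survivors, letters = run_scam(1024, 10)
    print(survivors)  # one person is left with a 10-for-10 "track record"
    print(letters)
```

No forecasting skill appears anywhere in the loop; the "track record" is produced entirely by discarding the people who saw a wrong call.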

“He chose 1024 stockbrokers, picked one stock, and in 512 envelopes he said the stock would be up by the end of the month, and in the other 512 he said it would go down.”

1024 stamps?

This guy is clearly already made of money so why is he even bothering.

/s

Stocks and stamps, 2 tastes that have gone great together since Charles Ponzi.

Is that from a cult classic I may not have run into? Because just the presence of Orbital in the background there has piqued my curiosity

Mean Girls! (the original)

Thanks, got a weekend watch then :)

I grabbed a book on the Fermi paradox from the university library and it turned out to be full of Bostrom and Sandberg x-risk stuff. I can’t even enjoy nerd things anymore.

it’s the actual fucking worst when the topics you’re researching get popular in TESCREAL circles, because all of the accessible sources past that point have a chance of being cult nonsense that wastes your time

I’ve been designing some hardware that speaks lambda calculus as a hobby project, and it’s frustrating when a lot of the research I’m reading for this is either thinly-veiled cult shit, a grift for grant dollars, or (most often) both. I’ve had to develop a mental filter to stop wasting my time on nonsensical sources:

- do they make weird claims about Kolmogorov complexity? if so, they’ve been ingesting Ilya’s nonsense about LLMs being Kolmogorov complexity reducers and they’re trying to use a low Kolmogorov complexity lambda calculus representation to implement their machine god. discard this source.

- do they cite a bunch of AI researchers, either modern or pre-winter? lambda calculus, lisp, and functional programming in general have a long history of being treated as the magic that’ll enable the machine god by AI researchers, and this is the exact low quality shit research that led to the AI winter in the first place. discard this source.

- at any point do they casually claim that the Church-Turing thesis has been disproven or that a lambda calculus machine is super-Turing? throw that crank shit in the trash where it belongs.

I think the worst part is having to emphasize that I’m not with these cult assholes when I occasionally talk about my hobby work — I’m not in it to make the revolutionary machine that’ll destroy the Turing orthodoxy or implement anyone’s machine god. what I’m making most likely won’t even be efficient for basic algorithms. the reason why I’m drawn to this work is because it’s fun to implement a machine whose language is a representation of pure math (that can easily be built up into an ML-like assembly language with not much tooling), and I really like how that representation lends itself to an HDL implementation.

oh absolutely! I get too much exposure to the crank side of all of those topics from my family, so I can definitely relate. now I’m flashing back to the last couple of times my mom learned the artificial sweetener I use is killing me (from the same discredited source every time; they make the “discovery” that a new artificial sweetener causes cancer every few years) and came over specifically to try and convince me to throw out the whole bag

that too! processed sugar was the devil too, as if granulizing cane sugar imbued it with the essence of evil. she also claimed they used bleach to make white refined sugar? I think the end goal was to get me to reject the idea of flavor. joke’s on that lady, my cooking is both much better than hers and absolutely terrible for you

Oh boy, I have thoughts about Kolmogorov complexity. I might actually write a section in my textbook-in-progress to explain why it can’t do what LessWrongers want it to.

A silly thought I had the other day: If you allow your Universal Turing Machine to have enough states, you could totally set it up so that if the first symbol it reads is “0”, it outputs the full text of The Master and Margarita in Unicode, whereas if it reads “1”, it goes on to read the tuples specifying another TM and operates as usual. More generally, you could take any 2^N - 1 arbitrarily long strings, assign each one an N-bit abbreviation, and have the UTM spit out the string with the given abbreviation if the first N bits on the tape are not all zeros.
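Here is the rigged-machine trick as a toy, to make the point concrete: description length is only defined relative to a chosen machine, and a cooked machine can give any favourite string an N-bit description. This is a minimal sketch, not a Turing machine: the "tape" is a Python string of bits, `literal_printer` is a hypothetical stand-in for a real universal machine, and `make_rigged_machine` is an illustrative name. The standard invariance theorem only says two universal machines agree up to an additive constant that depends on the pair, and this rigging is exactly how that constant gets large.

```python
from __future__ import annotations

from typing import Callable


def make_rigged_machine(
    n_bits: int,
    favourites: list[str],
    run_program: Callable[[str], str],
) -> Callable[[str], str]:
    """Wrap an ordinary interpreter with a hard-coded abbreviation table.

    Up to 2**n_bits - 1 strings get the non-zero n-bit codes 1, 2, ...
    Unused codes and the all-zero prefix fall through to `run_program`.
    """
    if len(favourites) > 2**n_bits - 1:
        raise ValueError("only 2**n_bits - 1 non-zero codes are available")
    table = {format(i + 1, f"0{n_bits}b"): s for i, s in enumerate(favourites)}

    def machine(tape: str) -> str:
        prefix, rest = tape[:n_bits], tape[n_bits:]
        if prefix in table:
            return table[prefix]  # arbitrarily long output from n_bits of input
        return run_program(rest)  # otherwise behave like the ordinary machine

    return machine


def literal_printer(program: str) -> str:
    # Toy stand-in for a real universal machine: it just prints its program.
    return program


if __name__ == "__main__":
    novel = "(imagine the full text of The Master and Margarita here)"
    machine = make_rigged_machine(1, [novel], literal_printer)
    print(machine("1"))      # a 1-bit "description" of the whole novel
    print(machine("00110"))  # all-zero prefix: runs the rest as usual
```

Nothing about the novel got simpler; the complexity was moved into the machine's hard-coded table.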

You could use the recent-ish Junferno video about Turing machines to demonstrate that point as well

doing work that’s not trying to free us from the tyranny of century-old mathematical formulations? how dare you! burn the witch!

(/s, of course! also your hardware calculus project sounds like a nicer time than my batshit idea (I want to make a fluidic processor… someday…))

I want to make a fluidic processor… someday…

fuck yeah! this sounds like the kind of thing that’d be incredibly beautiful if done on the macro scale (if that’s possible) — I love computational art projects that clearly show their mechanism of action. it’s unfortunate that a majority of hardware designers have a “what’s the point of this, it’s not generating value for shareholders” attitude, because that’s the point! I will make a uniquely beautiful computing machine and it won’t have any residual value any capitalist assholes can extract other than the beauty!

if I ever finish this thing, I should make a coprocessor that can trace its closure lists live as it reduces lambda calculus terms and render them as fractal art to a screen. I think that’d be fun to watch.

Yep. I love beautiful machines with beautiful action in the same way.

One of my favourites I’ve seen was a clock with a tilt table, switchback running tracks running widthwise across that table, and switches by the track ends. A small ball would run across the track for 60s until it hits the switch, which would cause a lever system to flip the orientation of the tilt table (starting the ball movement the other way).

Saw it in the one London collection of typically-stolen antiquities, I don’t recall the origin of it.

For the processor: yep, something larger is the intent, but I think I’d have to start with a model scale first just to suss out some practical problems. And then when scaling it, other problems. God knows if I’d want to make this “you can walk in it” scale, but I’ll see 😅

lisp (…) [has] a long history of being treated as the magic that’ll enable the machine god

Nonsense, we all know the robot god will be hacked together in Perl.

petition to move it to the scifantasy archives section

My first mini sneer. Hope it fits the criteria.

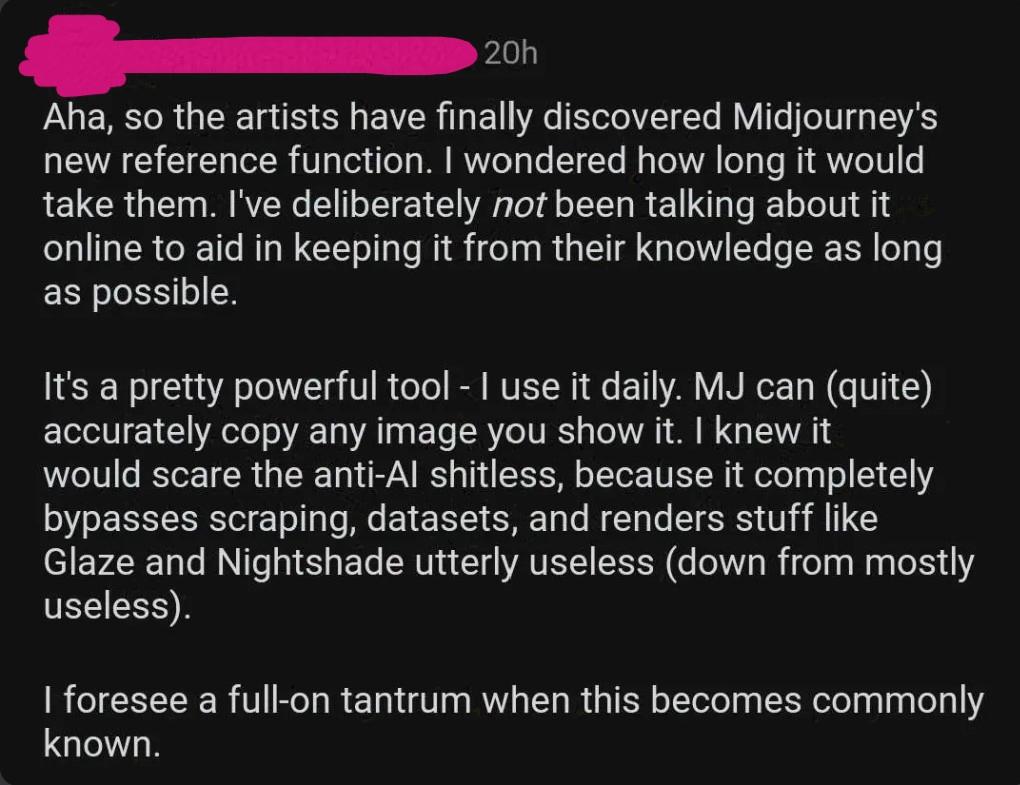

I frequent some (very AI-critical) art spaces, and every now and then we get some trolls who act like literal anime villains, complete with evil plans and revenge plots, but unfortunately without cool villain laughs.

I always wonder if those bozos all were stuffed into a trashcan by a gang of delinquent artists in high school, judging from the absolute hate-boner they seem to have.

I’ve deliberately not been talking about it online to aid in keeping it from their knowledge as long as possible.

Not sure if he knows that not all artists live in caves and make cave paintings. And even those who do probably have a smart phone with them, for better or worse. So I’m afraid his nefarious plan doesn’t quite work out.

I knew it would scare the anti-AI shitless, because it completely bypasses scraping, datasets […].

Shaking in my chair over here, but I still don’t understand how this negates the need for scraping and datasets. Just because I can attach a reference image to my prompt doesn’t mean the waifu generator can suddenly operate without training data.

I foresee a full-on tantrum when this becomes commonly known.

I mean, it’s not like Midjourney put out a big-ass announcement for that feature or anything. It’s totally a secret that only an elite circle knows about.

this is just an increasingly desperate Seto Kaiba taking to the internet because yu-gi-boy pointed out his AI-generated Duel Monsters deck does not have the heart of the cards, mostly because the LLM doesn’t understand probability, but he’s in too deep with the Kaiba Corp board to admit it

“Screw the art, I have LLMs!”

no regerts - from the SomethingAwful meme stock lols thread

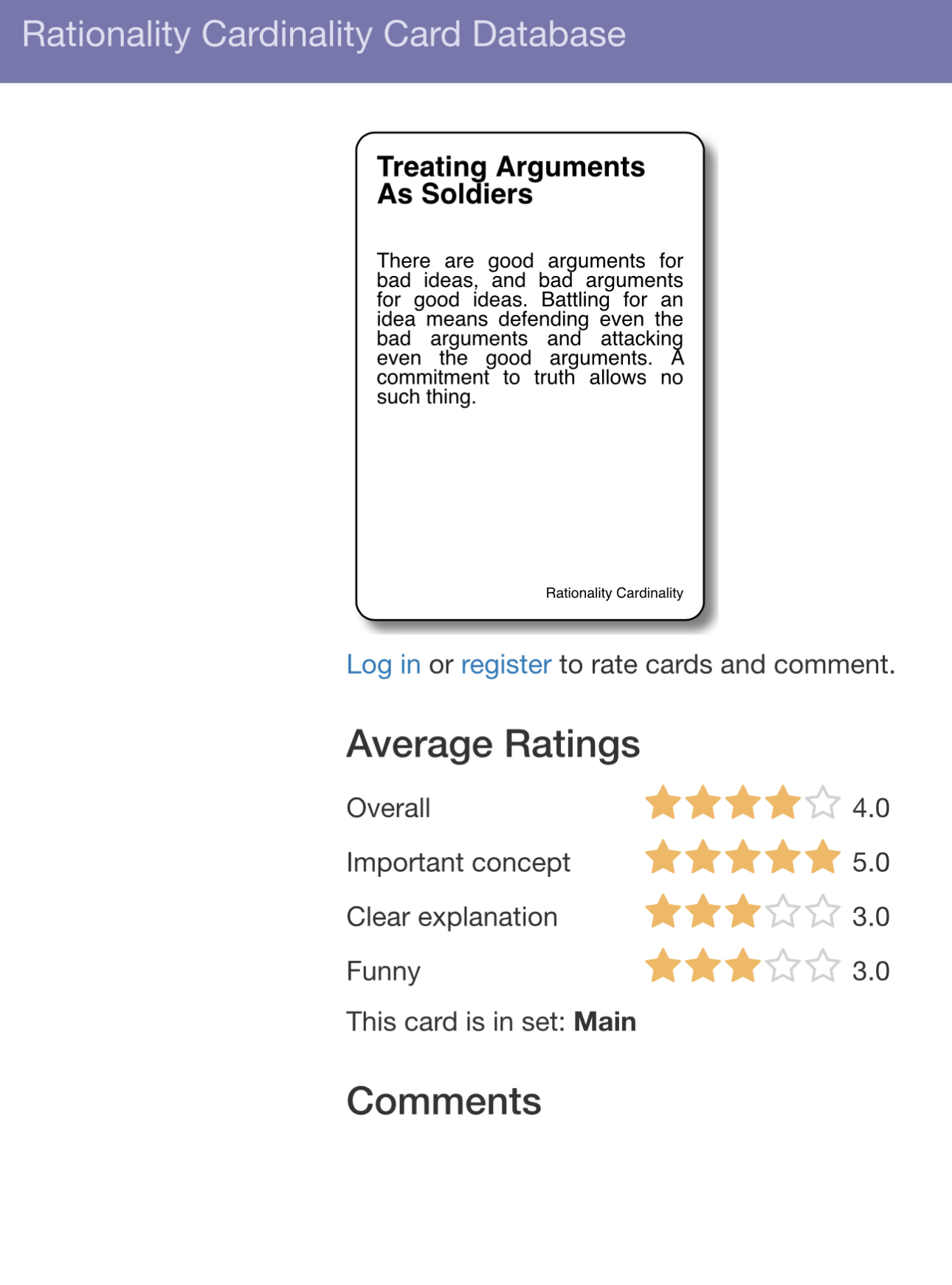

I learned about this because someone dragged a copy to aella’s birthday orgy and it showed up in one of the photos, but the rationalists have a Cards Against Humanity clone and it looks godawful

imagine someone pulls this out and you have no idea what it is. you’re kind of nervous and weirded out by the energy at this orgy but at least this will distract you. you look at your first card and it has a yudkowsky quote on it

one of the data fluffers solemnly logs me as “did not finish” as I flee the orgy

Raise the Sanity Waterline

We all wish our friends would be more rational, especially when they disagree with us. But actually helping them can be difficult, especially when already in an argument. Rationality Cardinality will help you teach your friends how to think more clearly, by introducing them to concepts in a fun and memorable way.

Well, it will make your friends more Rationalist but not in the way they hope.

every card is a valuable lesson in how insufferable the Rationalists are

Yep, that’s just as many WPC (words per card) as I was expecting

Jesus wept, that one deserves a thread of its own. I can’t remember the last time I winced this hard.

I propose a SneerClub game night where all we do is play rounds of this thing until we can’t handle any more of it

- puked on a fluffer

Having that much liquor on hold may need to be its own budget line item

dear fuck I found their card database, which doesn’t seem to be linked from their main page (and which managed to crash its tab as soon as I clicked on the link to see all the cards spread out, because lazy loading isn’t real):

e: somehow the cards get less funny the higher the funny rating goes

e2: there’s no punchability rating but it’s desperately needed

Only cards that have incorrect punctuation should be considered funny, this is a clear violation of Rat Law. Big Yud will hear of this.

instantly tries to connect to port 3000 on load too, heh. looks like a diyolo socket.io implementation, badly done

is it truly that hard for people to configure websocket upgrades under a known url on their primary hosting endpoint?

now you may think rationalists know anything about technology

new basilisk defense: only speak to it in websockets

• came in a deck of cards

Incredible, they just use the limerick that appears in the “Exaggeration and distortion of mental changes” section of Phineas Gage’s wikipedia article, uncritically.

Now I really wonder how much EA donation money went into making this.

I just found out that there are Dominican Republic supremacists? Like, the latest thing on xitter is making the DR out to be Caucasian Haiti. It’s some especially pol-brained nonsense about how the DR is successful because it’s a white country, even though they’re all very clearly AT MOST lightskinned? It’s an argument about a country that only works if you’ve never seen the country or its people.

This one dude in particular name-searches Haiti and spams the replies with as many white(ish) Dominicans as he can, along with the typical rants and graphs, and then if he gets dunked on in the quotes or the replies he just ritually posts the same 5 tiktoks of the same lightskinned Dominicans and says that all the darkskins are just Haitians. The worst part is that it works. His posting completely smothers any tweet disagreeing because he’s paying Elon 58 Dominican pesos a month to LARP as measurehead on pay-to-win 4chan.

I’m not all that courant about the DR, but re: Haiti the “Revolutions podcast” has a long series about the Haitian revolution, and it’s super interesting. It’s clear to me that Haiti paid the price of being the first Black republic to gain independence.

Edit: guess what historical figure Wikipedia is most interested in?

https://gerikson.com/m/2022/11/index.html#2022-11-17_thursday_03

Unsure if this meets even the lowest bar for this thread but I was jumpscared by Aella while browsing Reddit

(raised hand of disapproval) slut-shaming

(pointing finger of approval) Substack-shaming

i expect she was looking for something to be more famous for than not washing

Oh my Glob that’s on her Wikipedia page

thankfully the Spreadsheet Orgy is not

it made Know Your Meme tho

The spread of the parody chart led to jokes about what “came in a fluffer” could mean in a business context. For example, on March 4th, Martin Shkreli joked

Fuck this, launching myself out of the Universe

No, for good or ill.

(I forget where I learned about that list, but it makes for interesting reading.)

keep in mind that it is not a comprehensive list of sources, it’s a catalogue of querulous wikipedia arguments

Ah, the minutes of Wikipedia meetings

And I was so happy we had resisted talking about the graph/incident directly (here is the incident indirectly). My opinion remains a bit like, wow that is weird but good for her, and good to see they took safety seriously.

i will never forgive aella for giving good and wholesome birthday orgies a bad name

Aella : birthday orgies :: Yudkowsky : fanfiction

Mom: We have Eyes Wide Shut style orgies at home

people are laughing because some people came in the fluffer, but I’m just thinking: who logged all this shit? There was one person here who didn’t participate but just had a clipboard and logged everybody’s actions. The orgy’s middle manager.

Out of sheer fascination, I read her post. The actual answer is that they surveyed all the men after and logged their replies.

I’m sorry, it was never the intention of my post to make you go through that.

@drislands @Soyweiser I wonder what the error bars for self-reporting bias looked like.

Porn shoots have support crews too, but as per another reply it turns out they barely even bothered doing this themselves

My post was more a reaction to the know your meme people mocking the people who came in fluffers (which I found weird) than a real thing tbh.

ah, now I got that reference

let’s see if it’s something that continues to grow and grow so in 1 month or so people I know will try to explain it to me and I will have to pretend I’ve known all about it, because I am Terminally Online

Nah this thing has come and gone.

To be fair, it was beautiful data. This is definitely the first time that reading about a gangbang made me want to subscribe to canva dot com.

What the fucking fuck

There are some rather notable layers to that snippet of interaction, holy shit

I found this article last week about AI bullshit written in 1985 by Tom Athanasiou and published in the, also new to me, Processed World zine.

The world of artificial intelligence can be divided up a lot of different ways, but the most obvious split is between researchers interested in being god and researchers interested in being rich. The members of the first group, the AI “scientists,” lend the discipline its special charm. They want to study intelligence, both human and “pure” by simulating it on machines. But it’s the ethos of the second group, the “engineers,” that dominates today’s AI establishment. It’s their accomplishments that have allowed AI to shed its reputation as a “scientific con game” (Business Week) and to become as it was recently described in Fortune magazine, the “biggest technology craze since genetic engineering.”

The engineers like to bask in the reflected glory of the AI scientists, but they tend to be practical men, well-schooled in the priorities of economic society. They too worship at the church of machine intelligence, but only on Sundays. During the week, they work the rich lodes of “expert systems” technology, building systems without claims to consciousness, but able to simulate human skills in economically significant, knowledge-based occupations (The AI market is now expected to reach $2.8 billion by 1990. AI stocks are growing at an annual rate of 30%).

https://processedworld.com/Issues/issue13/i13mindgames.htm

All Processed World issues on archive.org https://archive.org/search?query=creator%3A"Processed+World+Collective"&and[]=mediatype%3A"texts"

and the official Processed World site with html archive https://processedworld.com

PW was a doctrinaire commie paper pretending to be punk rock - a bit Christian rock - but it has its moments.

I’ve been going through the issues and I’ve found so far that Tom’s works are the standouts

goatse is acausal

something something a cause hole

Sponsored by Big Cat Food!

#3 is “Write with AI: The leading paid newsletter on how to turn ChatGPT and other AI platforms into your own personal Digital Writing Assistant.”

and #12 is “RichardGage911: timely & crucial explosive 9/11 WTC evidence & educational info”

Congratulations to Aella for reaching the top of the bottom. Also random side thought, why do guys still simp in her replies? Why didn’t they just sign up for her birthday gangbang?

oh that is damning

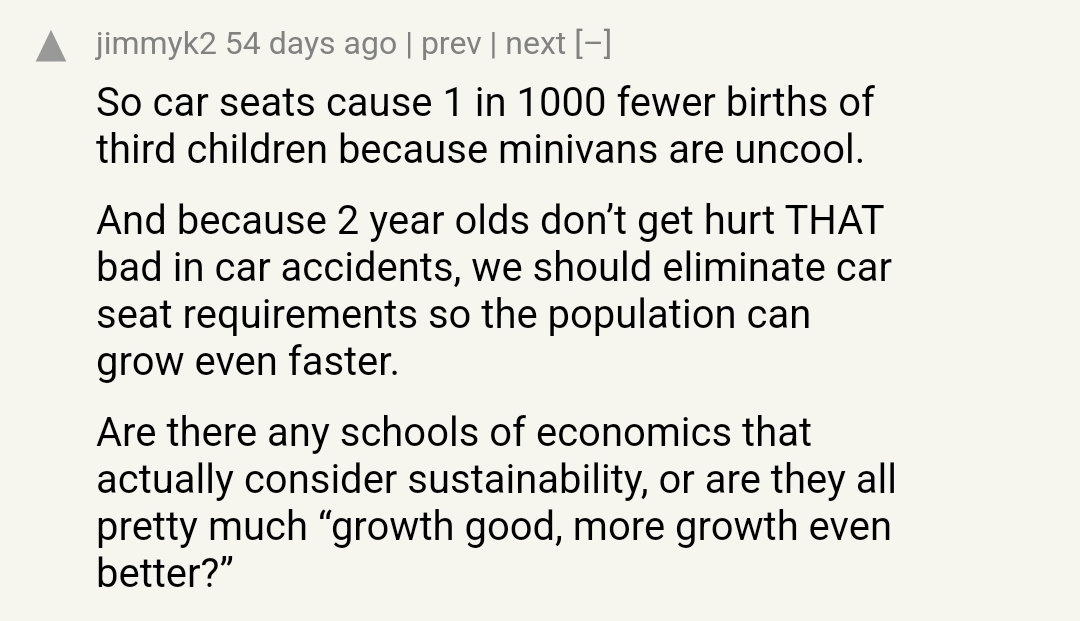

Here’s that car seat post I was talking about

The best sneer is from the comments imo

Unfortunately, the genie is out of the bottle here. It would be political malpractice to liberalize these safety rules. The first child who dies or is critically injured after eliminating the post two year old requirements is a political disaster for whoever changed the rules

Give people hope for the future. Climate change takes away people’s hope, so solve climate change to give them their hope back.

Can’t argue with that. Why don’t we just get on solving climate change, eh?

Edit: found the HN discussion, not positive: https://news.ycombinator.com/item?id=38709653

This might be sacrilege, but HN sneers are my favorite sneers. Especially because snark is a dang-able offence

To be scrupulously fair, it’s perfectly possible to construct vehicles that are both good at transporting multiple people and don’t take up square miles of road. See the vehicle market in SE Asia as an example.

Or this segment, almost unknown in the US: https://en.wikipedia.org/wiki/Compact_MPV

In fact, if more vehicles like this were available, it would be good both for Quiverful families and Earth-huggers! Alas the pickup truck will enable us to drive, one by one, to the Apocalypse.

of fucking course it’s a Zvi post

10k words, checks out

Undisputable Champion of “Well ackshually”

I didn’t realize he was namebrand

my man never should have stopped turning dragons sideways

I’m doing a reading of good fan-fiction at a con this weekend, to counter the many “bad fanfic reading” panels. I want to read an interesting passage from HPMoR

this is almost definitely how bible reading groups displaced much more interesting activities like drinking a fuckton of mead and reading poetry

I wonder how many people attended and how many laughed.

these passages have not improved with time

convincing you that something that seems evil is the right thing to do

y tho

That’s a great thread name!

had a feeling you’d like it in particular

Thank the acausal robot god for this thread, I can finally truly unleash my pettiness. Would anybody like to sneer at the rat tradition of giving everything overly grandiose names?

“500 Million, But Not A Single One More” has always annoyed me because of the redundancy of “A Single One.” Just say Not One More! Fuck! Definitely trying to reach their title word count quota with that one.

The Zvi post that @slopjockey@awful.systems linked here is titled “On Car Seats as Contraception | Or: Against Car Seat Laws At Least Beyond Age 2” which is just… so god damn long for no reason. C’mon guys - if you want to use two titles, just use one. If you want to use two titles, just use one.

Then there’s the whole slew of titles that get snowcloned from famous papers like how “Attention is all you need” spurred a bunch of “X is all you need” blog posts.

Here’s a card for the CAH thing @self posted

Snowclone Titles Considered Harmful; or: __________

How I Learned to Stop Worrying and Love the ___

Sequences

This repetitive, tautological, meaningless, tautologous, redundant tautology really gets my goat!

Car Seats as Contraception

At first I thought this was about how bench seats have just about vanished.